Nvidia, a frontrunner in the development of artificial intelligence (AI) hardware, has sent ripples through the tech industry with the unveiling of its latest offering – the Blackwell B200 GPU. This announcement underscores Nvidia’s commitment to maintaining its leadership position in the highly competitive AI hardware market, as companies across the globe scramble to develop ever more powerful and efficient AI solutions.

This article delves into the technical specifications and capabilities of the Blackwell B200 GPU, exploring how it surpasses its predecessor and introduces advancements that promise to revolutionize the field of AI. We will also examine the broader implications of this unveiling, including its potential impact on the development of large language models (LLMs) and the future of Nvidia’s gaming graphics card lineup.

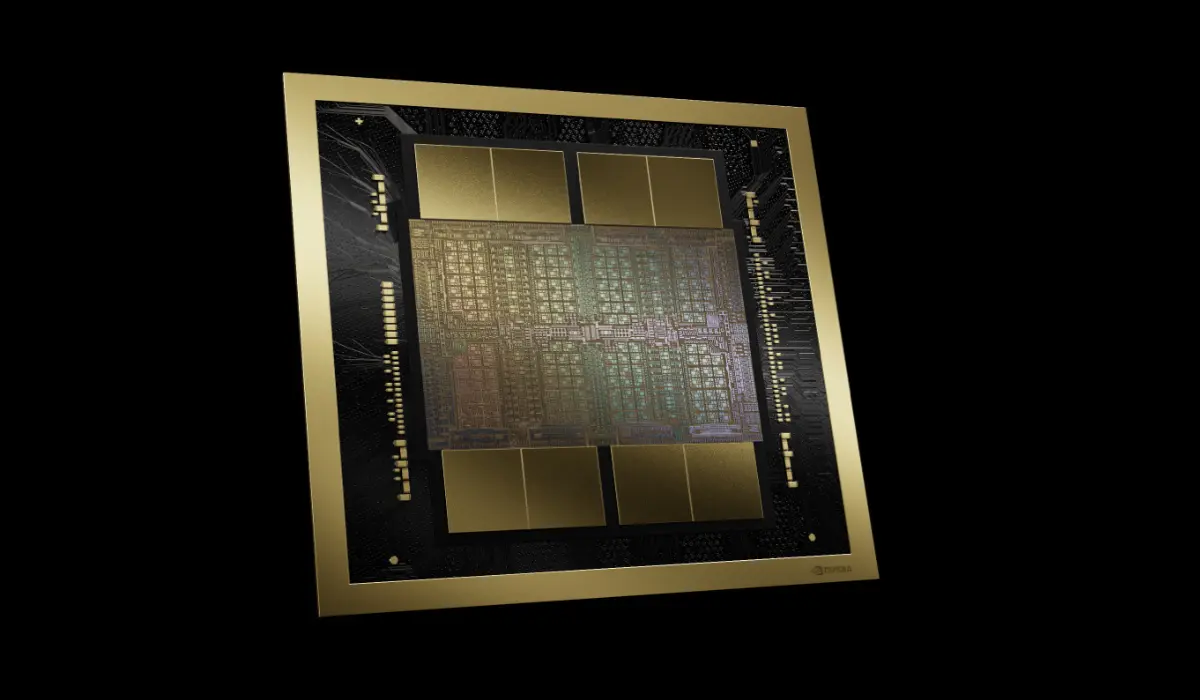

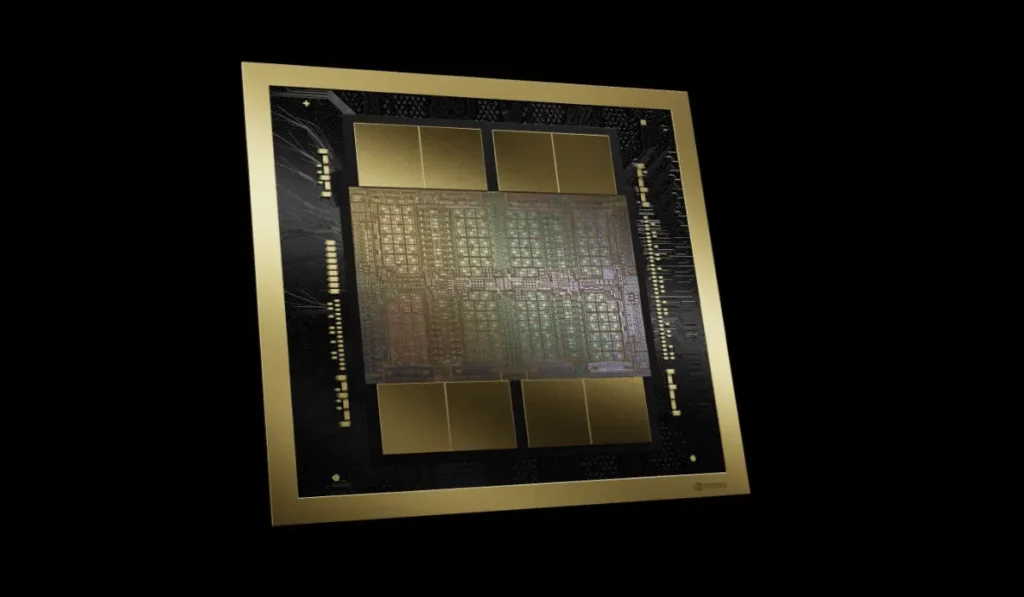

Unmatched Performance: The Power of the Blackwell B200 GPU

The Blackwell B200 is the most powerful chip Nvidia has designed specifically for AI applications. It packs 208 billion transistors and, according to Nvidia, delivers up to 20 petaflops of 4-bit floating-point (FP4) performance. That should mean faster processing and better efficiency on complex AI tasks.

The GB200 “Superchip”: Combining Power and Efficiency

Nvidia didn’t stop at the B200. The company also introduced the GB200, a “superchip” that pairs two B200 GPUs with a single Grace CPU. Nvidia says this configuration offers up to a 30x performance boost for LLM inference workloads along with substantial efficiency gains. Compared with the H100, the previous-generation chip, the GB200 is claimed to cut both cost and energy consumption by up to 25x. For companies deploying large-scale AI models, that would mean significant cost savings and a smaller environmental footprint.

Real-World Applications: Revolutionizing AI Model Training

The potential of the Blackwell architecture is clearest in the training of massive AI models. According to Nvidia, training a model with 1.8 trillion parameters would previously have required 8,000 Hopper GPUs drawing 15 megawatts of power. The company claims that 2,000 Blackwell GPUs can do the same job while consuming 4 megawatts. That is a quarter of the GPUs and just over a quarter of the power, which would lower operating costs and make the development of complex AI models more accessible and sustainable.
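
The ratios implied by these figures can be checked with a few lines of arithmetic. The sketch below uses only the numbers quoted above, which are Nvidia's claims rather than independent measurements. The names `ClusterSpec` and `compare` are illustrative, and the per-GPU figure is simply total power divided by GPU count, so it includes whatever non-GPU overhead was counted in the quoted totals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterSpec:
    """Hypothetical container for the cluster figures quoted in the article."""
    name: str
    gpus: int
    power_mw: float  # total cluster power in megawatts, as claimed by Nvidia

    @property
    def kw_per_gpu(self) -> float:
        # Total power divided by GPU count; this includes any non-GPU
        # overhead that was counted in the quoted total.
        return self.power_mw * 1000 / self.gpus


def compare(old: ClusterSpec, new: ClusterSpec) -> dict:
    """Return the reduction factors when moving from `old` to `new`."""
    return {
        "gpu_reduction": old.gpus / new.gpus,
        "power_reduction": old.power_mw / new.power_mw,
    }


hopper = ClusterSpec("Hopper", gpus=8000, power_mw=15.0)
blackwell = ClusterSpec("Blackwell", gpus=2000, power_mw=4.0)

ratios = compare(hopper, blackwell)
print(f"GPU count reduction: {ratios['gpu_reduction']:.2f}x")
print(f"Power reduction:     {ratios['power_reduction']:.2f}x")
print(f"Hopper kW per GPU:    {hopper.kw_per_gpu:.3f}")
print(f"Blackwell kW per GPU: {blackwell.kw_per_gpu:.3f}")
```

On these numbers, the power drawn per GPU is roughly the same in both clusters. The claimed savings come from needing far fewer GPUs, not from each GPU drawing less power.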

Enhanced LLM Inference: Faster Speeds and Larger Models

The performance improvements extend to LLM inference as well. Nvidia cites benchmark results on a 175 billion parameter GPT-3 model that show a sevenfold performance increase for the GB200 over the H100. Nvidia also claims a fourfold increase in training speed, which would let researchers and developers iterate on and refine their LLMs more quickly.

Architectural Innovations: The Engine Behind the Power

Several key architectural advancements underpin the performance gains of the Blackwell B200. A central innovation is the second-generation transformer engine, which Nvidia says doubles compute throughput, bandwidth, and the maximum size of models that can be handled. It does this by using 4 bits per neuron instead of the previous 8, which is where the aforementioned 20 petaflops of FP4 performance comes from.
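
To make the effect of halving the bit width concrete, here is a back-of-the-envelope sketch of how much memory the weights alone occupy at 8 and 4 bits per parameter. The helper `weight_memory_gb` is a hypothetical name, and the parameter counts are the 175 billion and 1.8 trillion figures quoted in this article. The estimate ignores activations, optimizer state, and any scaling metadata a real low-precision format may need.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Parameter counts taken from the article; labels are for display only.
for label, params in [("175B", 175e9), ("1.8T", 1.8e12)]:
    fp8 = weight_memory_gb(params, 8)
    fp4 = weight_memory_gb(params, 4)
    print(f"{label}: FP8 {fp8:,.1f} GB, FP4 {fp4:,.1f} GB")
```

Halving the bits per parameter halves the weight footprint, so the same amount of memory can hold a model with roughly twice as many parameters.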

Another significant advancement is the next-generation NVLink switch. It lets up to 576 GPUs communicate with one another, with 1.8 terabytes per second of bidirectional bandwidth per GPU, enabling the creation of massive AI clusters. To achieve this, Nvidia developed a new network switch chip with 50 billion transistors and 3.6 teraflops of its own FP8 compute. According to Nvidia, a cluster of just 16 GPUs would previously dedicate around 60% of its resources to communication between units, leaving only 40% for actual computation. The NVLink switch is meant to cut this communication overhead substantially and unlock more of the potential of large GPU clusters.
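
The cost of that communication overhead is easy to quantify. The sketch below is a minimal illustration that assumes the 60/40 split applies uniformly to every GPU in the cluster. The helper `effective_gpus` is a hypothetical name, and the 20% figure in the second call is purely illustrative, not a number from Nvidia.

```python
def effective_gpus(num_gpus: int, comm_fraction: float) -> float:
    """GPU-equivalents of useful compute left after communication overhead."""
    if not 0.0 <= comm_fraction <= 1.0:
        raise ValueError("comm_fraction must be between 0 and 1")
    return num_gpus * (1.0 - comm_fraction)


# Figure quoted in the article: 16 GPUs with about 60% going to communication.
before = effective_gpus(16, 0.60)
# Illustrative assumption only: the same cluster if overhead dropped to 20%.
after = effective_gpus(16, 0.20)

print(f"Useful GPU-equivalents at 60% overhead: {before:.1f}")
print(f"Useful GPU-equivalents at 20% overhead: {after:.1f}")
```

Under the quoted split, a 16-GPU cluster does the useful work of about 6.4 GPUs, which is why reducing the overhead matters as much as raw per-chip speed.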

The Future of Gaming Graphics Cards

While the primary focus of the Blackwell architecture is AI, its influence extends beyond that domain. Nvidia has hinted that Blackwell will form the foundation for future iterations of its RTX gaming graphics card lineup. This suggests that gamers can anticipate a significant performance leap in upcoming graphics cards, although Nvidia has not shared specifics.

Scaling for the Future: High-Speed Networking Solutions

To support massive AI clusters built from thousands of GB200 superchips, Nvidia also unveiled new high-speed networking products. The 800 Gbps Quantum-X800 InfiniBand and Spectrum-X800 Ethernet platforms are designed to meet the communication demands of these large-scale deployments and to pave the way for even more powerful and complex AI systems.

A New Era of AI Innovation

The unveiling of the Blackwell B200 GPU marks a significant milestone in the evolution of AI hardware. With its claimed performance gains, architectural innovations, and scalable networking, the Blackwell architecture promises to change the way we train and use AI models. From accelerating the development of LLMs to enabling powerful AI clusters, it has the potential to unlock transformative possibilities across many sectors. As researchers and developers put this technology to work, we can expect a new wave of AI innovation that will shape industries and redefine how we interact with technology.

Stay ahead of the curve. Get in-depth analysis of the hottest tech and AI news at The Dogmatic, your trusted source.
